While that process is ongoing, both companies are reportedly in secret discussions to commercialize a new type of advanced AI memory module known as SOCAMM, or small outline compression attached memory module. NVIDIA is said to be in talks with SK Hynix as well for this memory module.

Prototypes are currently being exchanged with NVIDIA

The talks center around commercializing this new memory standard. It's viewed by the industry as an advancement over the existing high-bandwidth memory chips that form the backbone of AI accelerators today.

SOCAMM modules are designed to significantly improve the performance of artificial intelligence supercomputers. SOCAMM is said to offer a better cost-performance ratio, and its module design has a higher number of ports, which helps reduce the data bottlenecks that remain a challenge in AI computing.

SOCAMM is also detachable, so data center operators can swap and upgrade memory modules to achieve ongoing performance improvements. Given its compact size, more SOCAMM modules can be installed within the same area for improved high-performance computing.

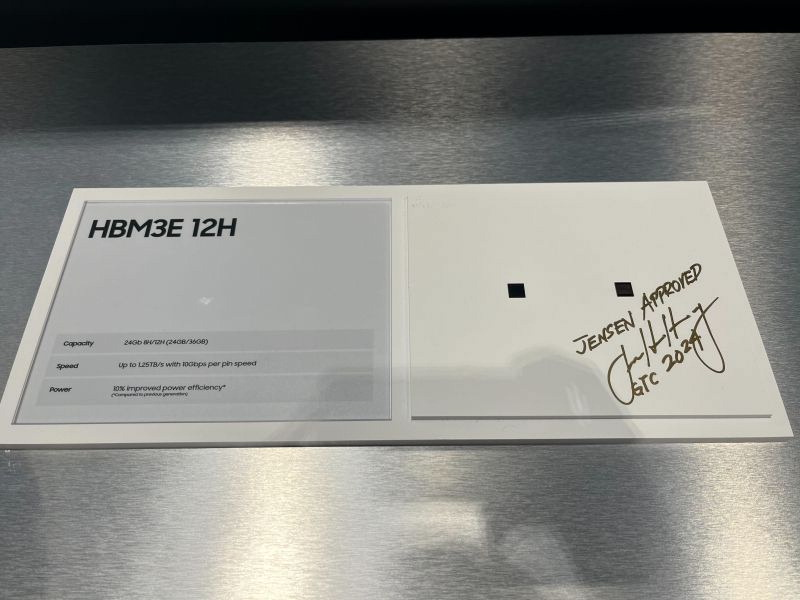

Industry insiders say that NVIDIA and memory companies like Samsung are currently working on SOCAMM prototypes and running performance tests ahead of mass production, which is expected to begin before the end of 2025.

This could provide Samsung with another opportunity to reclaim some of the ground that it has lost to SK Hynix in the high-bandwidth memory segment.