For the last couple of years, artificial intelligence (AI) has been the buzzword in the technology industry. It gained popularity with the rise of GenAI-powered chatbots, such as Google’s Gemini, Microsoft’s Copilot, and OpenAI’s ChatGPT. In most cases, these chatbots process user requests on cloud-based servers powered by specialized hardware, which usually features high bandwidth memory (HBM). As a result, demand for HBM has also increased massively over the same period.

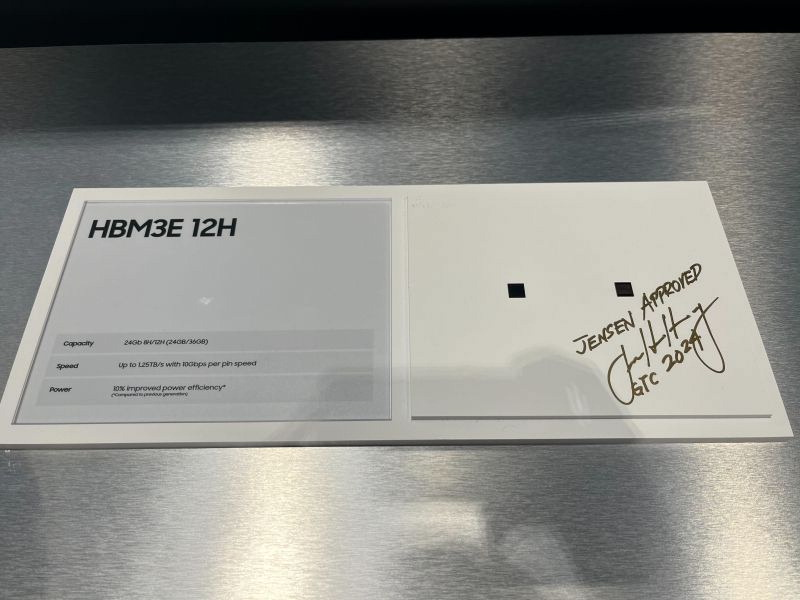

Samsung Electronics, despite being the world’s largest manufacturer of memory chips, has not been doing well in the HBM space, even though it has come up with cutting-edge products, such as the 12-layer HBM3E 12H DRAM. After five consecutive quarters of operating losses, the company incurred losses of over USD 11 billion last year. In the first quarter of this year, it rebounded with an operating profit of USD 1.38 billion. However, it is still behind SK Hynix, which is leading the AI semiconductor market with its latest HBM3E chips.

Samsung creates the HBM Development Team

Samsung is now making a major change to its HBM department. According to a new report from ET News, Samsung Electronics has combined the various teams it had for the development of HBM, including those for HBM3, HBM3E, and HBM4, into a single team called the HBM Development Team. The new team also includes experts in advanced packaging of HBM who were previously part of the Advanced Packaging (AVP) Business Team.

Reportedly, the company has taken this step to make greater strides in HBM development and thereby capture a bigger share of the AI semiconductor market. Sohn Young-Soo, vice president of the Memory Product Planning Team at Samsung Electronics and an expert in designing high-performance DRAM, will lead the team, which will focus on research and development of next-generation HBM3, HBM3E, and HBM4 chips.

Samsung could soon supply HBM3E chips to Nvidia

According to previous reports, Samsung’s HBM3E has passed Nvidia’s certification process for use in the company’s AI GPUs. If so, Samsung should soon start supplying HBM3E chips to Nvidia. That should massively increase Samsung Electronics’ revenue and, in turn, its operating profit, helping it on its way to becoming the leader in the HBM and AI semiconductor market. We expect big announcements from the brand soon.

Image Credit: Samsung