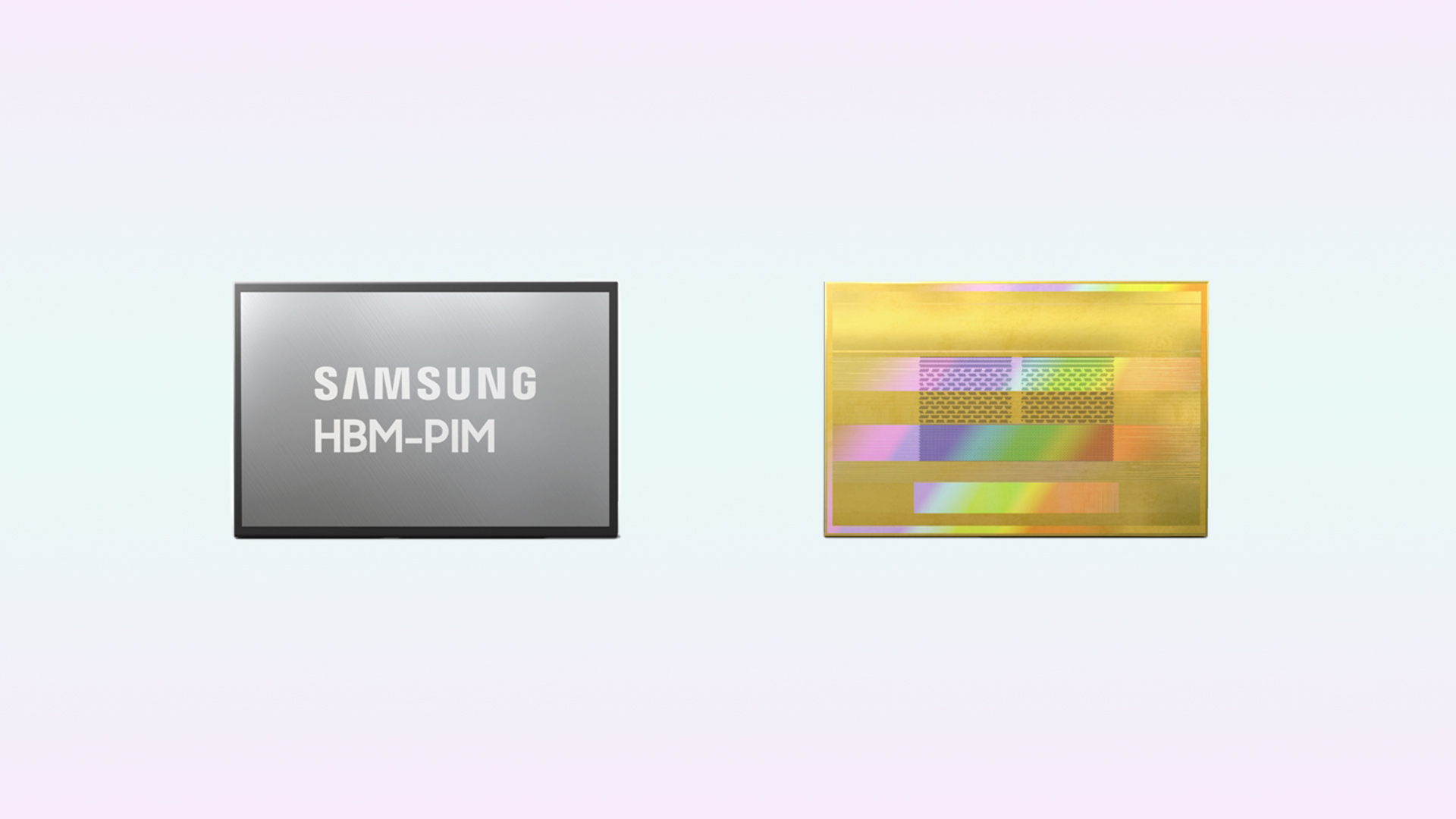

The South Korean firm built its new HBM-PIM memory on HBM2 Aquabolt, the high bandwidth memory (HBM) chip it first launched in 2018. The "PIM" (processing-in-memory) part refers to logic-based processing units placed inside the memory itself. Samsung says this more than doubles AI processing performance while cutting power consumption by 70%. The chip is aimed primarily at high-performance computing (HPC) servers and AI applications.

In the standard computer architecture, memory and processing are kept separate, so every computation requires moving data between them; when large volumes of data are involved, that traffic adds significant latency. Samsung designed HBM-PIM to reduce this overhead by performing some of the processing where the data is stored. The new chip is also compatible with the existing HBM interface, which means companies can build AI accelerators with it without changing their existing hardware and software.

Samsung said in a press release that it will supply HBM-PIM chips to customers for testing and verification in the first half of 2021, and will then work with them to standardize the PIM platform across the industry ecosystem.